Naive Bayes Classification Algorithm in Practice

What the Naive Bayes algorithm is and how it is used in classification tasks.

Introduction

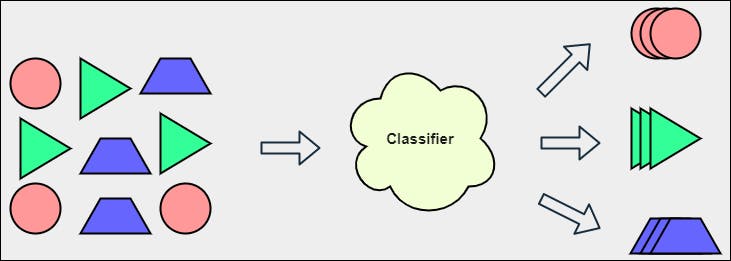

Classification is a task of grouping things together on the basis of the similarity they share with each other. It helps organize things and thus makes the study more easy and systematic. In statistics, classification refers to the problem of identifying to which set of categories an observation or data value belongs to.

Examples -

- Identifying whether an email is a spam or not spam.

- On certain parameters of health, identifying or classifying whether a patient gets a heart attack or not.

For humans, it can be very easy to do the classification task assuming that he/she has proper domain-specific knowledge and given certain features he/she can achieve it by no means. But, it can be tricky for a machine to classify - unless it is provided with proper training from the data and algorithm (classifier) that is used for learning. Thanks to Machine Learning and AI with which, now we are able to train machines with lots of data and predicting the results on unseen data.

Credits of Cover Image - Photo by Annie Spratt on Unsplash

Machine Learning

Machine Learning is a method to make machines learn from the previous experience (data) with which predictions are made on the future unseen data. These predictions may not be perfect and accurate but simply standard guesses that can be considered to make future decisions.

Since we are talking about a machine to do the classification task, luckily, over the years researchers have built various classification algorithms that can be used for our own datasets (assuming the dataset is processed enough). Some of the types of classification algorithms are -

- Logistic Regression

- Naive Bayes Classifier

- K-Nearest Neighbor (KNN)

- Decision Trees

- Neural Networks

Note - In this article, we will focus only on the Naive Bayes Classifier - algorithm.

Naive Bayes - Theory

A simple and robust classifier that belongs to the family of probabilistic classifiers. It follows the idea of the Bayes Theorem assuming that every feature is independent of every other feature. Given the categorical features (not real-valued data) along with categorical class labels, Naive Bayes computes likelihood for each category from every feature with respect to each category of class labels. Thus, it will choose a specific category of a class label whose likelihood is maximum.

Let's assume that we are given class labels such as -

$$C_k = [c_1, c_2, c_3, \dots, c_K]$$

and features such as -

$$X = [x_1, x_2, x_3, \dots, x_n]$$

with the help of the Bayes Theorem, we can have -

$$P(C_k | X) = \frac{P(C_k) P(X | C_k)}{P(X)}$$

Now, by applying chain rule and applications of conditional probability, we will get -

$$P(C_k | x_1, x_2, x_3, \dots, x_n) \propto P(C_k) \prod P(x_i | C_k) \ (i \ \text{from} \ 1 \ \text{to} \ n)$$

Clearly, the above is a probability model which can be used to construct a classifier such as -

$$\hat{y} = \text{argmax} \bigg[P(C_k) \prod P(x_i | C_k) \ (i \ \text{from} \ 1 \ \text{to} \ n) \bigg]$$

That's it. Based on this idea, the classifier predicts the category. Let's try to code this from scratch.

Naive Bayes - Code

For the practice of Naive Bayes, we shall have a processed categorical data with features and class labels to compute likelihoods. The whole process is explained below.

Data Source - bit.ly/3oZ9s83

Libraries

import pandas as pd

import numpy as np

Data Reading

data_source = 'http://archive.ics.uci.edu/ml/machine-learning-databases/car/car.data'

cdf = pd.read_csv(

filepath_or_buffer=data_source,

names=['buying','maint','doors','persons','lug_boot','safety','class'],

sep=','

)

The data is stored in the variable called cdf.

Construction

class CategoricalNB():

The name of the classifier is CategoricalNB() and it is a class where we define other methods.

def __init__(self, train_df, test_df, label):

self.X_train, self.y_train = self.split_features_targets(df=train_df, label=label)

self.X_test, self.y_test = self.split_features_targets(df=test_df, label=label)

self.X_test_vals = self.X_test.values

self.y_test_vals = self.y_test.values

self.X_likelihood, self.y_likelihood = self.compute_likelihood()

The above method is a constructor that takes three parameters such as -

train_df→ refers to the subset of the data that is used to train the classifier.test_df→ refers to the subset of the data that is used to test the classifier.label→ refers to the series of data which is actually the column name of the class label.

def split_features_targets(self, df, label):

X = df.drop(columns=[label], axis=1)

y = df[label]

return X, y

The above method is used to separate features and targets from the data. It takes two parameters such as -

df→ refers to the entire dataset that is passed for classifying.label→ refers to the series ofdfwhich is actually the column name of the class label.

def compute_likelihood(self):

X_likelihood = {}

yc_df = self.y_train.value_counts().to_frame()

yc_df.reset_index(inplace=True)

yc_df.columns = ['class', 'count']

y_vc = {i : j for (i, j) in zip(yc_df['class'], yc_df['count'])}

y_vc_k = list(y_vc.keys())

for col in self.X_train:

each_col_dict = {}

x_col_vals = self.X_train[col].value_counts().to_frame().index.to_list()

fydf = pd.DataFrame(data={col : self.X_train[col], 'y' : self.y_train})

for ex in x_col_vals:

each_x_dict = {}

x_ex_df = fydf[fydf[col] == ex]

for ey in y_vc_k:

x_y_df = x_ex_df[x_ex_df['y'] == ey]

each_x_dict[ey] = len(x_y_df) / y_vc[ey]

each_col_dict[ex] = each_x_dict

X_likelihood[col] = each_col_dict

y_likelihood = {i : j / sum(list(y_vc.values())) for (i, j) in y_vc.items()}

return X_likelihood, y_likelihood

The above method is used to compute the likelihood of each category from every feature with respect to each category of the class label. This is very useful as it is helpful to predict the category of the new data point. Here, likelihood is simply the probability - computed as per the category.

def predictor(self, X_new):

cols = list(self.X_likelihood.keys())

col_new = {i : j for (i, j) in zip(cols, X_new)}

lprobs = {}

for l, v in self.y_likelihood.items():

cate_v = [self.X_likelihood[cn][cl][l] for (cn, cl) in col_new.items()]

lprobs[l] = round((np.prod(cate_v) * v), 4)

prob_ks = list(lprobs.keys())

prob_vs = list(lprobs.values())

return prob_ks[np.argmax(prob_vs)]

The above method is used to get the specific category of a class label whose probability for the parameter X_new is maximum. Here, the parameter X_new is basically the query point for which we want the prediction.

def predict(self):

if len(self.X_test_vals) == 1:

return self.predictor(X_new=self.X_test_vals[0])

preds = [self.predictor(X_new=i) for i in self.X_test_vals]

return preds

The above method is used to predict the categories of a class label for the completely unseen dataset. This method works for both single query point or multiple query points.

def accuracy_score(self, preds):

actual_vals = np.array(self.y_test_vals)

preds = np.array(preds)

corrects = np.count_nonzero(np.where((actual_vals == preds), 1, 0))

return corrects / len(actual_vals)

The above method is used to compute the level of accuracy (a metric) to determine how the model (algorithm) is performing. It is a fraction of the total number of correctly classified data with the total number of all the data points. Generally, the model whose accuracy level is greater than 0.80 or 80 is considered to be a good model.

Full Code

class CategoricalNB():

def __init__(self, train_df, test_df, label):

self.X_train, self.y_train = self.split_features_targets(df=train_df, label=label)

self.X_test, self.y_test = self.split_features_targets(df=test_df, label=label)

self.X_test_vals = self.X_test.values

self.y_test_vals = self.y_test.values

self.X_likelihood, self.y_likelihood = self.compute_likelihood()

def split_features_targets(self, df, label):

X = df.drop(columns=[label], axis=1)

y = df[label]

return X, y

def compute_likelihood(self):

X_likelihood = {}

yc_df = self.y_train.value_counts().to_frame()

yc_df.reset_index(inplace=True)

yc_df.columns = ['class', 'count']

y_vc = {i : j for (i, j) in zip(yc_df['class'], yc_df['count'])}

y_vc_k = list(y_vc.keys())

for col in self.X_train:

each_col_dict = {}

x_col_vals = self.X_train[col].value_counts().to_frame().index.to_list()

fydf = pd.DataFrame(data={col : self.X_train[col], 'y' : self.y_train})

for ex in x_col_vals:

each_x_dict = {}

x_ex_df = fydf[fydf[col] == ex]

for ey in y_vc_k:

x_y_df = x_ex_df[x_ex_df['y'] == ey]

each_x_dict[ey] = len(x_y_df) / y_vc[ey]

each_col_dict[ex] = each_x_dict

X_likelihood[col] = each_col_dict

y_likelihood = {i : j / sum(list(y_vc.values())) for (i, j) in y_vc.items()}

return X_likelihood, y_likelihood

def predictor(self, X_new):

cols = list(self.X_likelihood.keys())

col_new = {i : j for (i, j) in zip(cols, X_new)}

lprobs = {}

for l, v in self.y_likelihood.items():

cate_v = [self.X_likelihood[cn][cl][l] for (cn, cl) in col_new.items()]

lprobs[l] = round((np.prod(cate_v) * v), 4)

prob_ks = list(lprobs.keys())

prob_vs = list(lprobs.values())

return prob_ks[np.argmax(prob_vs)]

def predict(self):

if len(self.X_test_vals) == 1:

return self.predictor(X_new=self.X_test_vals[0])

preds = [self.predictor(X_new=i) for i in self.X_test_vals]

return preds

def accuracy_score(self, preds):

actual_vals = np.array(self.y_test_vals)

preds = np.array(preds)

corrects = np.count_nonzero(np.where((actual_vals == preds), 1, 0))

return corrects / len(actual_vals)

Naive Bayes - Testing

Before testing the model, we need to first split the original data into two parts. One part is used for training and another part is used for testing. In machine learning, we generally take 80% of the data for training and the remaining 20% data for testing.

Data Splitter

def splitter(dframe, percentage=0.8, random_state=True):

if random_state:

dframe = dframe.sample(frac=1)

thresh = round(len(dframe) * percentage)

train_df = dframe.iloc[:thresh]

test_df = dframe.iloc[thresh:]

return train_df, test_df

The above function is used to divide the data into two parts.

train_df, test_df = splitter(dframe=cdf)

We have successfully divided the data into two parts.

Object Creation

nb = CategoricalNB(train_df=train_df, test_df=test_df, label='class')

Prediction

preds = nb.predict()

Accuracy Score

acc = nb.accuracy_score(preds=preds)

print(acc)

The accuracy happens to be >= 82% which is a decent percentage and hence the model is good.

Challenges

- There can be situations where we have to apply Laplace Smoothing and avoid the problems of the probability becoming

0. I have not implemented it just for now. - If we have many features in the data, we might end up getting a very small probability. This can be avoided by taking the logarithmic probabilities.

- The above implementation only works for categorical data. If we have real-valued data, it is better to proceed with the Gaussian Naive Bayes procedure.

References

- Categorical Naive Bayes - bit.ly/3uwOrTh

- Machine Learning, Shatterline blog - bit.ly/3i1gqrv

Well, that's it for now. If you have liked my article you can buy some coffee and support me here. That would motivate me to write and learn more about what I know.